AI is accelerating faster than most organizations can handle. Yesterday, you were experimenting with a few vetted tools. Tomorrow, your teams are provisioning bespoke models, wiring ChatGPT into their processes, and creating prototypes in cloud sandboxes you didn’t even know were available.

Welcome to the world of Shadow AI—where AI tools and models are being used across your company, often without approval, oversight or visibility.

If it sounds a bit like shadow IT, that’s because it is. But the stakes here are higher. We’re not just talking about unapproved apps. We’re talking about AI models that can leak sensitive data, make decisions or quietly expose your business to major compliance risks.

So, how do we get a grip on it without killing the innovation that powers it?

Let’s break it down.

What Exactly Is Shadow AI?

Shadow AI occurs when staff utilize AI solutions outside of what IT or security has sanctioned. It may resemble:

- An individual employing ChatGPT to write internal documentation without realizing they’re entering proprietary information

- A programmer using GitHub Copilot without checking what code might be exposed

- A product team training a custom model on customer data in a third-party cloud environment

- Marketing spinning up Midjourney images without verifying usage rights

For the most part, it’s not malicious. People are trying to get stuff done, go fast, and see what’s possible with AI. But even good-faith use can create huge problems when it flies under the radar.

Why It's a Real Problem

The thing is, AI is different from your typical software.

It learns. It changes. It makes decisions.

And when it’s operating in the shadows, you’ve got no way of knowing what it’s doing—or what risks it’s introducing.

1. Data Exposure

It’s surprisingly easy to leak data when you don’t know where your tools are sending it. Public AI tools run prompts on their servers. If someone pastes in sensitive client info or unreleased code, that data may live on in ways you can’t control. IBM describes data leakage risks well.

2. Security Blind Spots

Most shadow AI tools have not been security-reviewed. Some may not even encrypt data. Others have weak APIs with little or no authentication. If these models interact with production systems or sensitive data, they can introduce vulnerabilities that attackers can exploit. CISA does a great job of explaining these risks.

3. Lack of Accountability

What if an unauthorized AI tool gives poor advice, flags the wrong customer, or makes a biased candidate recommendation? Without logging, auditing, or documentation, it’s difficult to even know what occurred, much less correct it.

4. Compliance and Regulation

With the EU AI Act and similar laws, plus increased scrutiny from regulators, deploying untested AI tools can land your company in trouble in a hurry. Here’s some nice background reading on AI policy in the EU.

Why Shadow AI Is Prevalent

Don’t pin it on the team yet.

Shadow AI frequently has noble beginnings: users wanting to make their work more efficient. It usually takes hold when:

- Teams are frustrated by slow procurement processes

- There is no policy for what is permitted

- Individuals do not understand that AI tools can pose serious threats

- Innovation is encouraged, but without oversight

If the rules around AI are too strict, people work around them. If there are no rules at all, anything goes.

The solution? Strike a middle ground.

How to Respond Without Shutting Things Down

The objective isn’t to shut everything down and stifle creativity. The objective is to establish secure parameters for intelligent discovery. Here’s how you can begin.

1. Recognize What's Occurring

Denial won’t work. Don’t pretend shadow AI isn’t happening. Assume it’s already occurring, and approach it from a position of curiosity, not retribution.

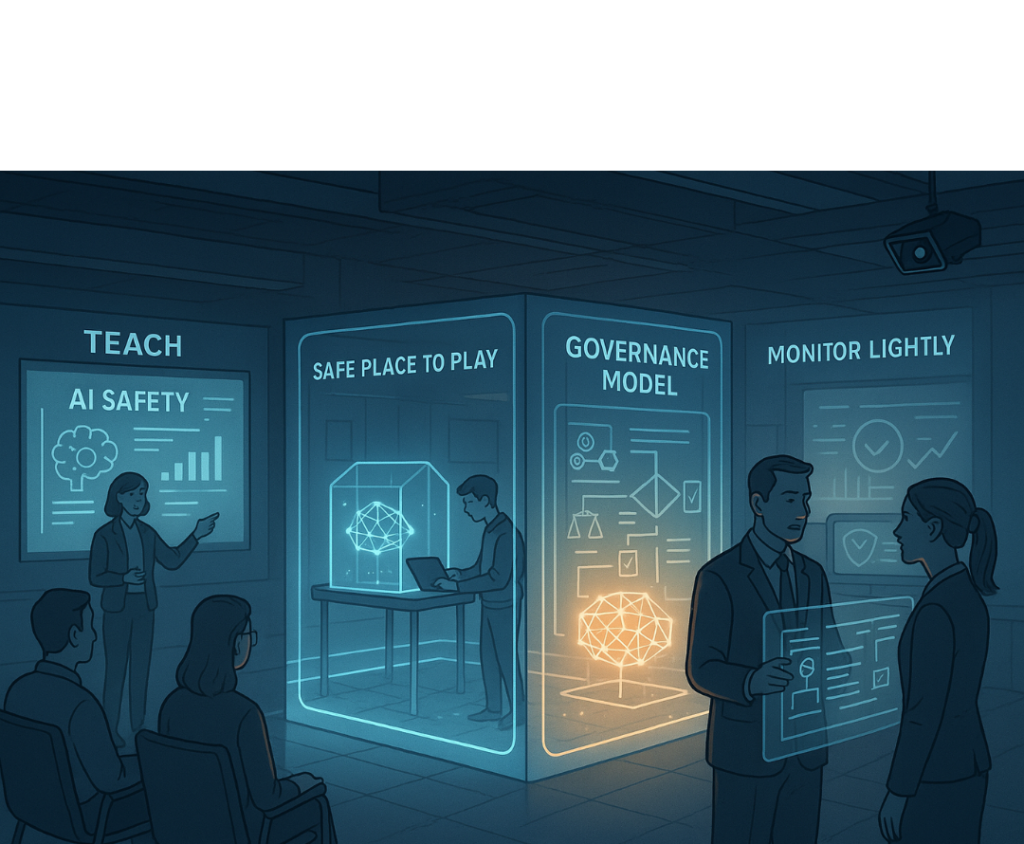

2. Teach, Don't Simply Restrict

Hold in-house workshops that discuss what’s acceptable and what’s not. The average employee is not a security specialist. They merely require clarity.

A decent guide: NCSC’s fast tips for being safe online.

3. Establish a Safe Place to Play

Create an internal sandbox where groups can experiment with AI tools using mock data or sanctioned use cases. If you give people a “legal” area to play, they are less likely to stray.
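To make “mock data” concrete, here is a minimal sketch of how a team might generate synthetic customer records for sandbox experiments. The field names, value lists, and the `mock_customers` function are all hypothetical; a real sandbox would mirror the shape of your own data without copying any of it.

```python
import random

# Illustrative value pools; none of these come from a real dataset.
FIRST_NAMES = ["Alex", "Sam", "Jordan", "Taylor"]
PLANS = ["free", "pro", "enterprise"]


def mock_customers(n, seed=0):
    """Return n synthetic customer records (hypothetical schema).

    A fixed seed makes the output reproducible, so experiments
    in the sandbox can be repeated and compared.
    """
    rng = random.Random(seed)
    return [
        {
            "customer_id": f"TEST-{i:04d}",    # clearly marked as test data
            "name": rng.choice(FIRST_NAMES),
            "email": f"user{i}@example.com",   # reserved example domain
            "plan": rng.choice(PLANS),
            "monthly_spend": round(rng.uniform(0, 500), 2),
        }
        for i in range(n)
    ]


if __name__ == "__main__":
    for row in mock_customers(3):
        print(row)
```

Prefixing IDs with `TEST-` and using the `example.com` domain makes it obvious at a glance that a record is synthetic if it ever shows up outside the sandbox.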

4. Establish Your Governance Model

You don’t have to boil the ocean. Begin small.

- Which tools are sanctioned?

- What data can and cannot be utilized?

- Who should be brought in when someone trains a new model?

Microsoft provides a useful governance model for public sector AI, but it works just as well for enterprise.
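One way to start small is to write the answers to those three questions down as a simple, checkable register. The sketch below is only an illustration: the tool names, data classifications, reviewer teams, and the `check_use` function are assumptions made up for this example, not part of any vendor’s governance model.

```python
from dataclasses import dataclass

# Hypothetical data classifications, ordered from least to most sensitive.
SENSITIVITY = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}


@dataclass(frozen=True)
class ToolPolicy:
    name: str
    max_data_class: str  # most sensitive data class allowed in this tool
    reviewer: str        # who to bring in for new use cases or model training


# Illustrative register; names and limits are placeholders, not recommendations.
APPROVED_TOOLS = {
    "chat-assistant": ToolPolicy("chat-assistant", "internal", "security-team"),
    "code-assistant": ToolPolicy("code-assistant", "confidential", "platform-team"),
}


def check_use(tool: str, data_class: str) -> str:
    """Return 'allowed', or a short reason why the use needs review."""
    policy = APPROVED_TOOLS.get(tool)
    if policy is None:
        return f"'{tool}' is not sanctioned; request a review before using it"
    if SENSITIVITY[data_class] > SENSITIVITY[policy.max_data_class]:
        return (
            f"'{data_class}' data exceeds the limit for '{tool}'; "
            f"contact {policy.reviewer}"
        )
    return "allowed"


if __name__ == "__main__":
    print(check_use("chat-assistant", "public"))
    print(check_use("chat-assistant", "restricted"))
    print(check_use("image-generator", "public"))
```

Even a register this small gives people a clear answer instead of a guess, and it can grow as new tools are vetted.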

5. Appoint AI Stewards

Consider these your internal advocates—individuals who get both AI and the business. They can serve as advisors and gatekeepers, helping to vet tools before they’re deployed widely. The World Economic Forum suggests this type of role.

6. Monitor—Lightly and Transparently

You don’t have to micromanage, but do monitor usage patterns. Tools such as proxy logs or API gateways can be used to identify spikes in traffic to unauthorized services. SANS has some ideas on monitoring here.
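As a rough sketch of what light-touch monitoring could look like, the snippet below counts daily requests to AI services that are on a watchlist but not yet sanctioned. Everything specific here is an assumption for illustration: the log format (`YYYY-MM-DD user host`), the placeholder hostnames, the threshold, and the `unsanctioned_usage` function. Real proxy or gateway logs will look different.

```python
from collections import Counter

# Assumed watchlist of AI service hostnames; these are placeholders.
WATCHED_AI_HOSTS = {"chat.example-ai.com", "api.example-llm.net"}
# Hosts on the watchlist that have already been approved.
SANCTIONED_HOSTS = {"api.example-llm.net"}


def unsanctioned_usage(log_lines, threshold=3):
    """Count requests per (day, host) for watched but unsanctioned hosts.

    Each log line is assumed to be 'YYYY-MM-DD user host', separated by
    whitespace. Returns {(day, host): count} for counts at or above
    the threshold.
    """
    counts = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed lines
        day, _user, host = parts
        if host in WATCHED_AI_HOSTS and host not in SANCTIONED_HOSTS:
            counts[(day, host)] += 1
    return {key: n for key, n in counts.items() if n >= threshold}


if __name__ == "__main__":
    sample = [
        "2024-05-01 alice chat.example-ai.com",
        "2024-05-01 bob chat.example-ai.com",
        "2024-05-01 carol chat.example-ai.com",
        "2024-05-01 dave api.example-llm.net",
    ]
    print(unsanctioned_usage(sample))
```

The sketch deliberately aggregates by service rather than by person. That keeps the focus on spotting which tools people want, which fits the goal of monitoring lightly and transparently.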

Final Thoughts

Shadow AI is a sign that people want to do more with the tools they have, and that’s not a bad thing. It’s an opportunity to meet your team where they are, build smarter guardrails, and turn unseen risk into tangible innovation.

The companies that succeed with AI won’t be the ones that act most rigidly. They’ll be the ones that establish trust, train their staff, and install just enough structure to allow AI to flourish securely.

Let’s illuminate what’s lurking in the dark. Because avoiding it? That’s the true danger.